AI in Health & Life Sciences — Industry Use Cases

How ETHORITY applies Ethical AI & Healing Intelligence in Health & Life Sciences.

From precision oncology to motion-adaptive radiotherapy, ETHORITY pioneers frameworks and platforms that align AI innovation with ethics, compliance, and patient outcomes.

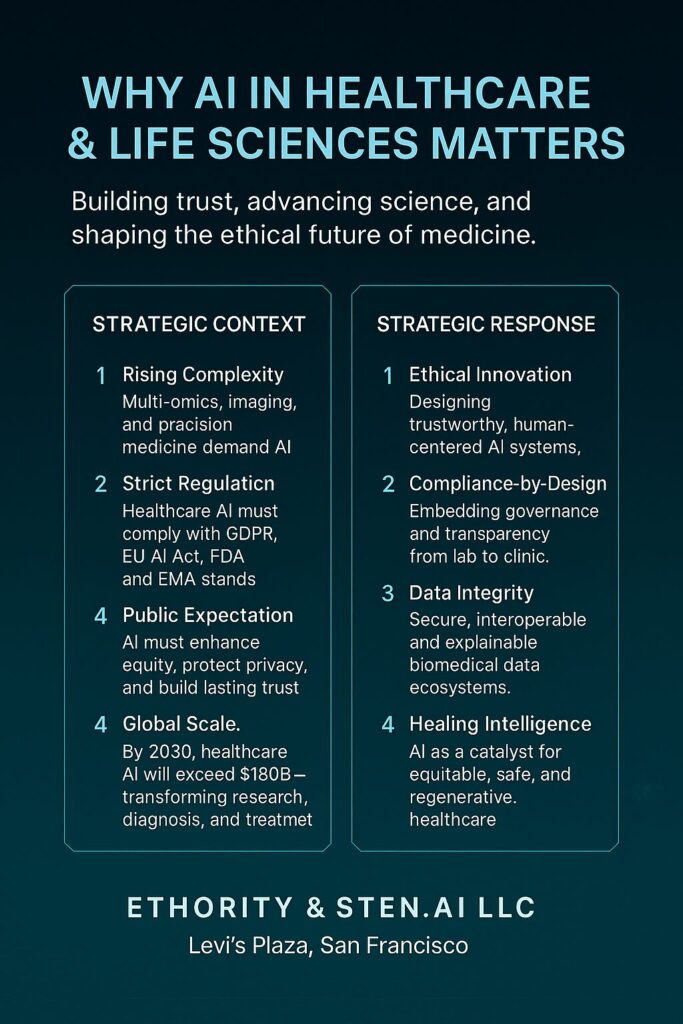

Why AI in Healthcare & Life Sciences Matters

Rising complexity in medical AI (multi-omics, imaging, epigenetics).

Strict compliance requirements (GDPR, EU AI Act, FDA, EMA).

Societal expectation → AI must protect patients, improve health equity, and build trust.

By 2030, global healthcare AI is projected to exceed $180 billion (WHO & MarketsandMarkets). Genomics, single-cell sequencing, and precision radiotherapy will be mainstream. ETHORITY drives ethical biomedical intelligence with EpiTrace.AI (epigenetic lineage decoding) and BeamGuide.AI (adaptive radiotherapy) — ensuring compliance, transparency, and healing outcomes.

The Questions to Be Asked

How do we ensure AI in diagnostics and treatment is explainable and compliant by 2030?

As AI advances in clinical decision-making, transparency and traceability become crucial for EU AI Act compliance. Hospitals must adopt explainable models, human-in-the-loop validation, and auditable workflows to maintain patient safety and trust.

Who owns biological intelligence: the patient, the researcher, or the platform?

The rise of AI-driven bioinformatics poses a challenge to traditional data ownership. Ethical frameworks must evolve to balance intellectual property, informed consent, and patients’ rights over genomic and epigenetic data.

How do we protect patient dignity and consent under GDPR and the EU AI Act’s high-risk classifications?

Most medical AI systems fall into the “high-risk” category under the EU AI Act. Ensuring lawful data use requires explicit consent, privacy-by-design infrastructure, and interoperable audit trails for clinical AI.

What frameworks align AI with public trust, fairness, and human rights?

Global health AI must adhere to frameworks such as UNESCO’s Recommendation on the Ethics of Artificial Intelligence, the OECD AI Principles, and ETHORITY’s TrustAI model, embedding fairness, accountability, and explainability in every algorithmic layer.

Facts & Latest Research (2025–2030 Horizon)

Global AI in healthcare market projected to reach USD 36.7 B in 2025, expanding at ~38.6% CAGR through 2030

Grand View Research

AI’s rapid expansion in diagnostics, drug discovery, and hospital operations is driven by breakthroughs in computer vision, NLP, and federated learning, with the market expected to exceed USD 100 B by 2030.

By 2025, AI decision-support tools become mainstream in hospitals, offering real-time evidence-based guidance

Boston Consulting Group (BCG)

BCG projects that clinical decision-support AI will be widely integrated into hospital systems, improving accuracy and efficiency through predictive diagnostics and treatment optimization.

Generative AI in healthcare valued at USD 2.79 B in 2025, forecast to grow to nearly USD 40 B by 2034

Research Nester

The next decade will see generative AI drive personalized medicine, drug discovery, and clinical documentation automation, reshaping the entire medical R&D pipeline.

MIT’s 2025 model reduces annotation effort in clinical imaging by 70%, accelerating trial workflows

MIT News

MIT researchers developed a self-supervised AI model that minimizes manual data labeling in medical imaging, significantly speeding up clinical trials and diagnostic pipeline validation.

Stanford warns of bias and reliability risks in AI mental health chatbots

Stanford News

Stanford’s 2025 study cautions that unregulated AI chatbots may introduce bias, misinformation, and dependency risks, underscoring the need for ethical design and clinical oversight in digital mental health tools.

AI in primary healthcare shows high potential, but governance and interoperability remain unsolved

BMC Primary Care

A 2024 BMC study concludes that while AI can improve efficiency and accessibility in primary care, the lack of standardized governance and interoperability frameworks limits scalability and trust.
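As a rough sanity check on the market projection cited in this section (USD 36.7 B in 2025, growing at ~38.6% CAGR through 2030), the compound-growth arithmetic can be sketched as follows. The function name is hypothetical and the calculation assumes a constant annual growth rate over five years, which real markets will not follow exactly.

```python
def project_market_size(base_usd_bn: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate.

    Hypothetical helper for illustration; inputs are the figures quoted above.
    """
    return base_usd_bn * (1.0 + cagr) ** years


# USD 36.7 B in 2025, ~38.6% CAGR, projected forward to 2030 (5 years).
projected_2030 = project_market_size(36.7, 0.386, 2030 - 2025)
print(f"Projected 2030 market size: USD {projected_2030:.1f} B")
```

Under these assumptions the 2030 figure comes out at roughly USD 188 B, which is in line with the statement that the market is expected to exceed USD 100 B by 2030.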

ETHORITY AI Use Cases

Key Use Cases

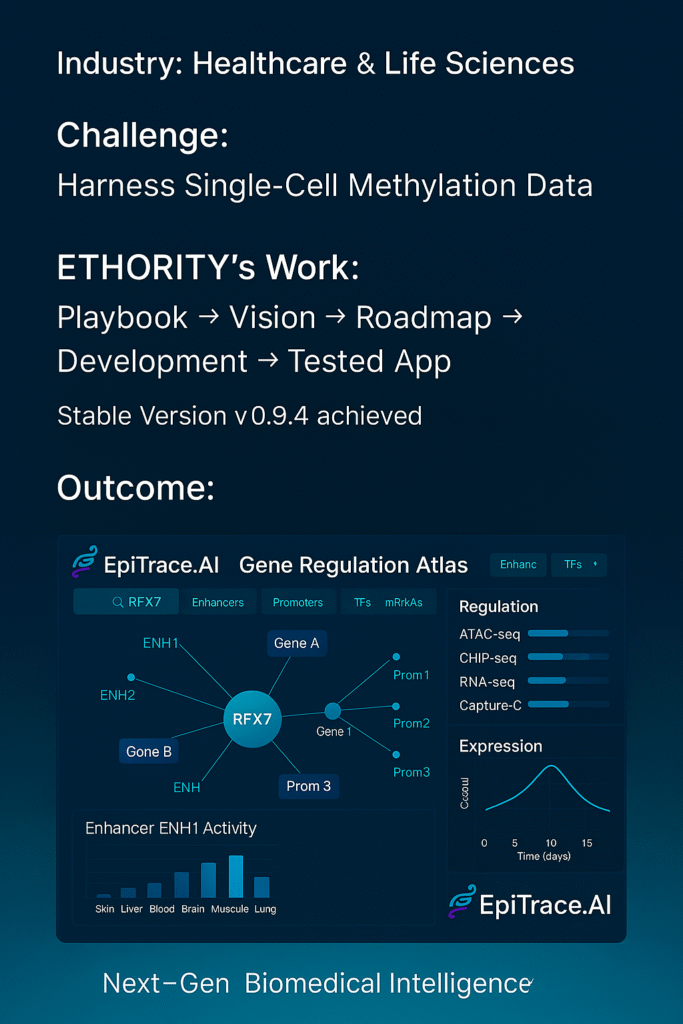

EpiTrace.AI → lineage tracing, relapse prediction, biomarker discovery

BeamGuide.AI → adaptive radiotherapy with sub-millimeter accuracy

Predictive Healthcare Analytics → hospital capacity, chronic disease forecasting

Multi-omics Integration → genomic, epigenetic, imaging data fused with AI

Compliance Landscape

EU AI Act → healthcare diagnostics classified as high-risk

GDPR → strict data sovereignty requirements for biomedical data

WHO AI in Health principles → safety, explainability, inclusiveness

OECD & UNESCO frameworks → fairness, accountability, transparency

Challenges

Bias in biomedical training data

Interoperability across health systems

Explainability in clinical decision support

Latest Models, Data & Tools (Experience Hub)

Models: Variational Autoencoders (VAEs), Transformer models for lineage tracing, AI-1st OS frameworks for governance.

Data: Single-cell methylation, genomic sequencing, clinical trial datasets.

Tools:

EpiTrace.AI app → demo lineage tracing dashboard

EthiScope™ → compliance guardrail testing in biomedical AI

Assure Certification → tiered proof of compliance (Bronze, Silver, Gold)


Outcomes for Healthcare Clients

Compliance-ready platforms for research & clinical use

Improved patient outcomes with predictive & adaptive AI

Trust by design → explainability, fairness, and transparency

Global collaboration with DKFZ, foundations, regulators, and innovators

Why ETHORITY Solutions?

- End-to-end lifecycle coverage → from readiness to certification.

- Compliance by design → EU AI Act, OECD, UNESCO standards embedded.

- Ethics at the core → fairness, transparency, explainability.

- Healing Intelligence → unique platforms that extend AI into biomedical and planetary impact.